April 23, 2019

Reading time: 4 min

We recommend reading the first part of this article (Part 1) if you have not done so yet. After that, everything in this text will be twice as interesting and eight times clearer.

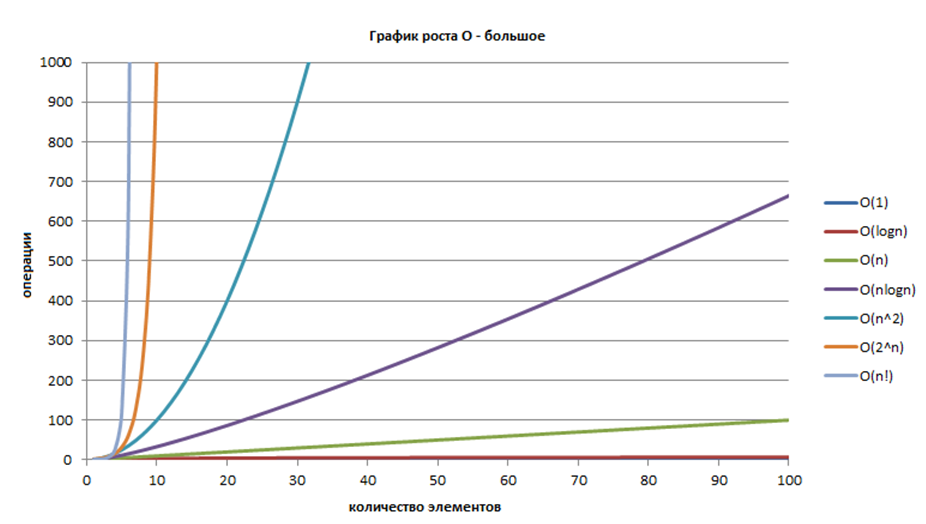

1. Algorithmic complexity

This is also called computational complexity. Universities cover the concept in considerable detail, so we will limit ourselves to a short description. Algorithmic complexity is a function that takes the size of the input data and returns the amount of work a given algorithm performs. The amount of work is usually measured in abstract units of time and space, which are called "computational resources".
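To make "a function of the input size" concrete, here is a minimal sketch that counts the steps two search algorithms take on inputs of different sizes. The function names are illustrative and not taken from the article.

```python
def linear_search_steps(items, target):
    """Return the number of comparisons a linear scan makes."""
    steps = 0
    for value in items:
        steps += 1
        if value == target:
            break
    return steps


def binary_search_steps(sorted_items, target):
    """Return the number of probes a binary search makes on sorted input."""
    steps = 0
    lo, hi = 0, len(sorted_items) - 1
    while lo <= hi:
        steps += 1
        mid = (lo + hi) // 2
        if sorted_items[mid] == target:
            break
        if sorted_items[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return steps


for n in (1_000, 1_000_000):
    data = list(range(n))
    target = n - 1  # last element: the worst case for the linear scan
    print(n, linear_search_steps(data, target), binary_search_steps(data, target))
```

The linear scan's step count grows in proportion to the input size, while the binary search's grows roughly with its logarithm. That difference in growth is what algorithmic complexity describes.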

How does algorithmic complexity affect development?

First, we have a variety of data structures and algorithms. Stacks, deques, trees, B-trees, and hash tables are all different ways of representing information. The developer chooses a structure depending on which operations will be used most often, so a developer must understand all of these data structures well.
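As a small illustration of choosing a structure by its most frequent operation, the sketch below uses Python's built-in `list`, `collections.deque`, and `set`. The variable names are only examples.

```python
from collections import deque

# A stack: push and pop at the same end. A plain list does both in
# amortized O(1).
stack = []
stack.append("a")
stack.append("b")
top = stack.pop()

# A queue: add at one end, remove from the other.
# deque.popleft() is O(1); list.pop(0) would shift every remaining
# element, which is O(n).
queue = deque()
queue.append("a")
queue.append("b")
first = queue.popleft()

# A hash table: membership is O(1) on average, versus an O(n) scan
# of a list.
seen = {"alice", "bob"}
is_known = "alice" in seen
```

If removals from the front dominate, a deque is the better fit. If lookups dominate, a hash-based set or dictionary is.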

Another manifestation of algorithmic complexity is the "P versus NP" problem, a major unsolved problem in computer science. It asks whether every problem whose solution can be verified quickly (technically, in polynomial time) can also be solved quickly (again, in polynomial time). It has remained at the top of the list of central open problems in the theory of algorithms for over 30 years.

It is one of the seven Millennium Prize Problems selected by the Clay Mathematics Institute, each of which carries a US$ 1,000,000 prize for the first correct solution (Thank you, Wiki!).

In simple terms, the P = NP question is this: if a positive answer to a question can be checked quickly, can the answer also be found quickly? In other words, is verifying a solution really no easier than finding it? It is believed that if the answer turns out to be "yes", then, in theory, many hard problems could be solved much faster than they are now.
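The gap between checking and finding can be sketched with the subset-sum problem, a standard NP-complete problem chosen here purely as an illustration. Checking a proposed answer takes time linear in the input, while the naive search below may try up to 2^n subsets. No polynomial-time algorithm is known for the general problem.

```python
from itertools import combinations


def verify(numbers, target, indices):
    """Check a proposed certificate in time linear in the input size."""
    return (
        len(set(indices)) == len(indices)
        and all(0 <= i < len(numbers) for i in indices)
        and sum(numbers[i] for i in indices) == target
    )


def solve_brute_force(numbers, target):
    """Find a certificate by trying subsets: up to 2**n candidates."""
    n = len(numbers)
    for size in range(1, n + 1):
        for indices in combinations(range(n), size):
            if verify(numbers, target, indices):
                return indices
    return None


numbers = [3, 34, 4, 12, 5, 2]
answer = solve_brute_force(numbers, 9)
print(answer, verify(numbers, 9, answer))
```

If P were equal to NP, a search of this kind could in principle be replaced by an algorithm whose running time is polynomial, just like `verify`.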

2. The complexity of the development

This problem is described as follows: the speed of development decreases as the project grows. The larger the project, the slower its development.

How does this problem affect work? First, as we have already mentioned, it is impossible to predict deadlines accurately. Second, technology changes rapidly. Third, the complexity of development is the reason projects need more and more programmers, each of whom gets less and less done.

What do companies do in this situation? A number of companies have turned this aspect of complexity to their own benefit and built a business on the complexity of development. They do not make money by missing deadlines, and they do not try to produce a perfect product either. Instead, they constantly release new versions of their software and sell them to users.

As we have said, Agile helps developers on many projects. Here it works on the principle "if chaos cannot be prevented, you need to lead it." Then you have to find a benefit in it and turn it into a benefit for the client.

In addition, there is a set of rules for maintaining productivity, called the SOLID principles. If you follow them, the speed of work on the project will not fall off so quickly. The only difficulty is following them consistently. There are just five of them, and they deserve a separate discussion in the context of object-oriented programming.

3. Information complexity

It is also called Kolmogorov complexity, after the famous Soviet mathematician Andrey Kolmogorov, who introduced the concept in 1965. Briefly: the information complexity of a task or object is the number of characters in the shortest description (program) that solves the task or reproduces the object.

In other words, the complexity of a problem equals the number of characters in the shortest description of its solution.

In practice, this is the size of the code programmers need to write to solve the problem. Naturally, everyone wants to use notations and techniques that reduce the number of characters, so the idea arose that this complexity can be reduced too.

However, it has been proven that determining information complexity is an algorithmically unsolvable problem. No algorithm can tell us the minimum number of characters needed to solve a particular task.
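Although the true minimum cannot be computed, an upper bound is easy to obtain: any compressor produces one particular short description. The sketch below uses `zlib` from the Python standard library as a stand-in. The function name is illustrative, and the compressed size only bounds the complexity from above (up to the constant size of the decompressor).

```python
import os
import zlib


def description_length_upper_bound(data: bytes) -> int:
    """Length of one particular compressed encoding of `data`.

    This is only an upper bound: a shorter description may exist,
    and no algorithm can compute the true minimum in general.
    """
    return len(zlib.compress(data, 9))


regular = b"ab" * 5_000            # 10,000 bytes with an obvious pattern
random_like = os.urandom(10_000)   # 10,000 bytes with no exploitable pattern

print(len(regular), description_length_upper_bound(regular))
print(len(random_like), description_length_upper_bound(random_like))
```

The patterned input shrinks to a tiny fraction of its size, while the random bytes barely shrink at all. Neither number tells us how far we still are from the shortest possible description.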

What follows from this? First, it is impossible to estimate how large our applications will be. Second, new programming languages keep appearing, with constructs designed to reduce the number of characters in the code and make it more compact. No one can tell when this process will stop, because no one knows the minimum number of characters needed to describe a given operation.

Third, adherents of various programming languages regularly start holy wars, and we can observe the Blub paradox, described by the American programmer Paul Graham. Its essence is that a programmer who knows a certain language (here, the fictional language "Blub") "thinks" in it and expresses the solution to any task with it, so the more powerful tools of another language seem useless, because the programmer does not even see how to apply them.

At the same time, the limitations of "Blub" cannot become a stimulus for learning a more powerful language, because to see those limitations the programmer would already have to know a more powerful language.

By the way, the larger the subject area the program is written for, and the larger the task, the greater its information complexity. And the effort to make code more compact still does not keep up with the needs of business.

So it turns out that programs are becoming more and more cumbersome, and tasks have increasing information complexity.

Let's circle back to the mathematicians' problem: there is a prediction that by 2075 proofs of theorems will become so long that no single person will be able to understand even one of them in a lifetime. Humanity will need the help of computers to develop these proofs.

By analogy with mathematics, we can assume that programs will soon become huge too. But we cannot predict this with certainty, since their information complexity cannot be computed. Still, the need keeps arising for programs that write other programs for us, and that is what machine learning is in its current interpretation.

But both mathematical proofs and software development face one more complexity, the fourth.

4. The complexity of testing

The complexity of testing a program also grows nonlinearly, and very quickly, as the input data grows.

There is a book called "The Art of Software Testing" (by Glenford Myers, Tom Badgett, and Corey Sandler), the first edition of which was published in 1979. It gives an example of a program of almost a hundred lines of code that has 10 to the power of 10 possible execution paths. Even at just 5 seconds per path, checking them all would take a tester more than 1,500 years of nonstop work. For a human, that is an eternity.
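One way such path counts can arise is sketched below for a hypothetical program shape: a loop that runs between 1 and `max_iterations` times, where each pass independently takes one of several routes through the loop body. The shape and the function name are assumptions for illustration, not a reproduction of the book's program.

```python
def count_paths(branches_per_iteration: int, max_iterations: int) -> int:
    """Count distinct paths through a loop that runs 1..max_iterations times,
    where each iteration takes one of `branches_per_iteration` routes."""
    return sum(
        branches_per_iteration ** i for i in range(1, max_iterations + 1)
    )


for iterations in (1, 5, 10, 20):
    print(iterations, count_paths(5, iterations))
```

Each additional allowed iteration multiplies the number of paths, so a few lines of branching code inside a loop are enough to put exhaustive path testing out of reach.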

The industry's need for software testing resources is therefore very high. And we have to accept that any program may contain an error, and that we cannot claim a program works correctly, because we cannot prove that it does.

History reference

Sir Charles Antony Richard Hoare (Tony Hoare), a British computer scientist, tried to solve this problem in 1969 by publishing the article "An Axiomatic Basis for Computer Programming".

He attempted to axiomatize programming, that is, to present a formal logical system in which the correctness of programs could be proved. At first, the results were very good, as long as everyone worked with conditionals, loops, and the like. But when the community turned to procedural programming, everything "collapsed."

It turned out that the proposed axiom systems for describing procedures, as a rule, lead to a contradiction. And in 1980, the article "A Critique of Hoare's Logic" was published, which, with the help of counterexamples, put an end to Hoare's axiomatic basis.

In fact, the contradiction here is built into the concept of computability and is connected with the halting problem for Turing machines. There is no general algorithm that can determine, from a program and its input, whether the program will halt or loop forever. The halting problem leads to inconsistent axioms, and an inconsistent axiom system, unfortunately, allows us to prove absolutely any statement, true or false.
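What a checker can do in practice is run a computation with a step budget. The sketch below shows the limits of that approach: it can confirm that a computation halted, but running out of budget proves nothing. All names are hypothetical, and the Collatz iteration is used only as a convenient example of a computation whose running time is hard to predict.

```python
def halts_within(step_function, state, max_steps):
    """Run a computation for at most `max_steps` steps.

    `step_function(state)` returns the next state, or None when the
    computation has finished. Returns True if it finished within the
    budget, or None ("unknown") otherwise: an exhausted budget never
    proves that the computation loops forever.
    """
    for _ in range(max_steps):
        state = step_function(state)
        if state is None:
            return True
    return None


def collatz_step(n):
    """One step of the Collatz iteration; finishes when it reaches 1."""
    if n == 1:
        return None
    return n // 2 if n % 2 == 0 else 3 * n + 1


def spin_forever(n):
    """A computation that never finishes."""
    return n


print(halts_within(collatz_step, 27, 1000))
print(halts_within(collatz_step, 27, 10))
print(halts_within(spin_forever, 0, 1000))
```

The last two calls both return "unknown", although one computation would finish with a larger budget and the other never will. No choice of budget separates the two cases in general.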

If the axiomatic approach had proved itself, it would have been possible to fully automate program verification, but at the moment this is impossible.

As a result, we get this: the complexity of development grows, the size of programs grows, and the need for metaprogramming, in particular "artificial intelligence" and machine learning, grows too. Yet we cannot guarantee that our AI or "special" program will work correctly, because we cannot check it thoroughly.

Thus, there are four global problems that strongly affect the IT industry. For now there are only two ways to deal with them: "run" forward as fast as possible (as the Red Queen said) or do nothing at all. This is a business, so there will be a constant need for programmers who will write and verify everything, including AI.

Doubts that artificial intelligence will replace developers are completely justified. The reason is that no AI is yet capable of reflection, that is, of evaluating the results of its own activity. So a human is needed to judge whether the AI works correctly.

It sounds like a sci-fi movie, but one day we may find that our AI believes humanity is not needed on our planet. And why not? We pollute the environment, waste natural resources, reproduce uncontrollably, and love to destroy more than to create. We consider this statement false, but the AI may decide it is true. Yet the global question about artificial intelligence is not even whether its judgments are true or false, but whether they are beneficial for us. And that is really difficult: it is not enough to check a statement, you also need to keep the benefit in mind.

Since errors can occur in the logic of artificial intelligence, it is too early to say that it will be used in highly dangerous industries in the future.

The main problem is that, in developing such systems, we sometimes forget that a catastrophe does not require putting AI in charge of a nuclear power plant. To cause an apocalypse, the AI may, for example, mistakenly turn off electricity all over the planet. Or stop all elevators at once. Or order a pizza. It is almost impossible to assess the consequences of an error on such a scale; information complexity does not give us this understanding.

And if people themselves create things that can destroy the world, such as nuclear weapons, then with the computational power of artificial intelligence multiplied by the desire to create something outstanding, the world can be destroyed much faster, and in very nontrivial ways.
